Naila Murray: Teaching machines to see

"If we really want to design truly intelligent machines, they're going to need to be able to form subjective opinions."


Computer vision is an important area of artificial intelligence research. Improvements in how machines observe and interpret their surroundings could bring about the kind of technological developments that, until now, have been the stuff of sci-fi movies.

But will machines ever really be able to see? And what does seeing actually mean? Moreover, should machines try to replicate the neural processes humans use for vision – or is it better to start from scratch?

What is it to have sight?

Human vision is notoriously difficult to emulate. That’s why many computer vision experts have chosen to ignore the human visual system altogether. After all, couldn’t machines be designed to see the world completely differently – and in ways superior to those afforded us by the human eye?

But Naila, who grew up in Trinidad and Tobago, senses this thinking is no longer as prevalent in the computer vision community. Instead there’s been “a shift towards looking to the human vision system once again for inspiration”.

In fact Naila and her colleagues are trying to endow machines with human-like opinions about their observations – even trying to teach them what should be considered aesthetically pleasing and what shouldn’t. She also wants to help computers learn what they should be focusing on when they take in scenes.

It’s pioneering work in the field of computer vision, which has traditionally focused on semantic or objective details of an environment. “In the past we haven’t focused so much on what we call more subjective properties, such as aesthetics and visual saliency – what drives attention towards visual scenes,” explains Naila. “These types of properties go beyond objectivity, but they’re also very interesting. If we really want to design truly intelligent machines, they’re going to need to be able to form subjective opinions.”

Inspiration from photographers

To advance their work, Naila and her collaborators turned to an online community for photographers in which participants offer critiques of each other’s work. Naila wanted to use the opinions and expertise on the site to teach an algorithm to critique pictures. For this, she used machine learning – a method of data modelling that enables computers to learn without being explicitly programmed. It’s a field that benefits from the huge volumes of data available in the modern world, as well as from ongoing increases in processing power.

Naila explains: “In the past, computer vision researchers might have programmatically provided expert knowledge to an algorithm on aesthetically pleasing image characteristics. Then the algorithm would try to use these characteristics to predict whether an image is aesthetically pleasing.” With machine learning, by contrast, computers can discover those characteristics for themselves.

“We simply supplied a learning algorithm with images and the critiques that accompanied them and asked it to replicate the critiques. We assumed that if 100 people agree that this is a nice image then we can be pretty confident that that’s the case.

“You can imagine that to assess a portrait photograph, the types of aesthetic judgments that would be applied would not be the same as if it was a landscape. If it was a photograph of a landscape one might focus on features such as complex composition and vanishing lines. In a portrait different rules would apply. These are things a machine would discover by sifting through many examples.

“But of course the aesthetic judgments are being created by human beings in the first place. So what we did find was that a lot of characteristics that are intuitive, or are already rules of thumb, hold true.”
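The consensus idea in the quote above can be made concrete with a small sketch. It is not the team’s method: it assumes each image comes with numeric ratings rather than written critiques, and the vote minimum (`MIN_VOTES`, set to 100 only to echo the quote), the score threshold and the function names are all hypothetical. It turns crowd ratings into training labels and discards images with too few votes to trust.

```python
from statistics import mean

# Hypothetical values: 100 votes echoes the "100 people agree" remark;
# the 6.0 cut-off on an assumed 1-10 rating scale is arbitrary.
MIN_VOTES = 100
GOOD_THRESHOLD = 6.0


def aesthetic_label(ratings, min_votes=MIN_VOTES, threshold=GOOD_THRESHOLD):
    """Return 1 (pleasing), 0 (not pleasing) or None (too few votes to trust)."""
    if len(ratings) < min_votes:
        return None
    return 1 if mean(ratings) >= threshold else 0


def build_training_set(images):
    """Keep only (image_id, label) pairs backed by enough votes."""
    dataset = []
    for image_id, ratings in images.items():
        label = aesthetic_label(ratings)
        if label is not None:
            dataset.append((image_id, label))
    return dataset


images = {"sunset.jpg": [8] * 120, "blurry.jpg": [3] * 150, "new.jpg": [9] * 5}
print(build_training_set(images))
```

Here an image rated 8 by 120 people becomes a positive example, one rated 3 by 150 people becomes a negative example, and one with only five votes is left out. A learning algorithm would then be trained on the surviving pairs.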

A relationship with machines

Naila’s interest in computer vision can be traced back to her undergraduate degree in electrical engineering at Princeton University. “I was very interested in self-navigating machines, that is, machines that could operate in an environment autonomously, and in researching what it would take to create such machines. I went on to do a Master’s in artificial intelligence and computer vision.

“Why? Because computer vision makes use of a lot of intuition about human visual perception but at the same time is quite challenging. Human perception allows us to perform tasks such as object recognition almost trivially, but getting a machine to the same level of recognition accuracy has turned out to be extremely difficult. Investigating which insights are successful for training machines to see and which aren’t is fascinating.

“For example, humans can correctly identify the colour of an object under many different lighting conditions. It may be bright outside. It may be dark. It may be foggy. But we’re still able to say with very high accuracy what colours are present. For a computer this variability is extremely difficult to handle but our visual system is able to compensate for a lot of these environmental changes automatically.”

Deep learning

Learning about the human visual system gradually became a huge source of inspiration for Naila as she developed computer vision models during her PhD.

Moving towards deep learning, a sub-field of machine learning in which data passes through multiple layers of processing, was a natural choice for her. Naila explains: “Some deep learning methods use artificial neural networks, which are inspired by our brain’s visual system. The deep networks that are used in computer vision are very far from being biological models but certainly the basic inspiration, the hierarchical information extraction, is there.”

Naila is relying on deep learning to unlock another part of the visual puzzle for computers. She wants to help machines decide what they should be looking at in their field of vision. The importance of this area of machine-vision research is clear. For example, machines that must navigate their surroundings autonomously in real time need efficient algorithms to direct their visual attention. And just as humans prioritise visual focus to save brain-power for what matters, machines need to be able to recognise the visual stimuli most deserving of their finite processing power.

Directing a machine’s gaze

Naila explains: “Our eyes are constantly sampling our field of view and this is something that computer vision systems also do. Let’s imagine that a machine needs to track an individual through a video. If the video was taken outdoors the machine could probably safely ignore the sky and focus on the lower part of the image. What my colleagues and I have been doing is using deep-learning techniques to replicate the types of attentional patterns we need for such scenarios.”
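To make the sky example concrete, here is a hand-written version of that kind of spatial prior. It is purely illustrative: the point of the work described here is that a deep network learns such attention patterns from data instead of having them coded by hand. The saliency map is assumed to be a list of rows of scores, top row first, and `sky_fraction` and `sky_weight` are hypothetical parameters.

```python
def apply_vertical_prior(saliency, sky_fraction=0.4, sky_weight=0.1):
    """Down-weight the top `sky_fraction` of rows, assumed to show sky.

    `saliency` is a list of rows (top row first) of attention scores.
    Rows below the cut-off are left unchanged.
    """
    height = len(saliency)
    cutoff = int(height * sky_fraction)
    return [
        [value * (sky_weight if r < cutoff else 1.0) for value in row]
        for r, row in enumerate(saliency)
    ]


flat_map = [[1.0] * 3 for _ in range(10)]
weighted = apply_vertical_prior(flat_map)
print([row[0] for row in weighted])
```

With the defaults, a 10-row map keeps its bottom six rows unchanged and scales the top four by 0.1, so a tracker reading the map would spend its effort on the lower part of the frame.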

“So we used a collection of eye-tracking data which was gathered while people looked at a series of images. We fed these images and the eye-tracking data to a deep-learning algorithm which trained a convolutional neural network to reproduce the patterns of attention. This was quite successful and the synthesised attention maps replicate the collected data pretty well.”
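Eye-tracking data of the kind described here is commonly turned into a training target by blurring each recorded fixation into a smooth attention map, and a predicted map is then scored against it. The sketch below shows both steps in plain Python under stated assumptions: fixations are (row, column) pixel positions, the blur width `sigma` is arbitrary, and Pearson’s correlation coefficient stands in as one widely used saliency metric. The article does not say which metric the team used, and the convolutional network itself is omitted.

```python
import math


def fixation_density_map(fixations, height, width, sigma=2.0):
    """Turn (row, col) fixations into a density map that sums to 1.

    A Gaussian blob of width `sigma` (an assumed value) is placed at each
    fixation, approximating the imprecision of where people actually looked.
    """
    grid = [[0.0] * width for _ in range(height)]
    for fr, fc in fixations:
        for r in range(height):
            for c in range(width):
                d2 = (r - fr) ** 2 + (c - fc) ** 2
                grid[r][c] += math.exp(-d2 / (2 * sigma ** 2))
    total = sum(sum(row) for row in grid)
    if total > 0:
        grid = [[v / total for v in row] for row in grid]
    return grid


def correlation_coefficient(pred, target):
    """Pearson correlation between two equally sized maps (1.0 = identical shape)."""
    a = [v for row in pred for v in row]
    b = [v for row in target for v in row]
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    std_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    std_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    if std_a == 0 or std_b == 0:
        return 0.0
    return cov / (std_a * std_b)


human = fixation_density_map([(6, 4), (7, 5)], height=10, width=10)
good_model = fixation_density_map([(6, 5)], height=10, width=10)
bad_model = fixation_density_map([(1, 9)], height=10, width=10)
print(correlation_coefficient(good_model, human), correlation_coefficient(bad_model, human))
```

A prediction centred near the human fixations correlates far more strongly with the human map than one placed in the opposite corner; during training, a network’s output map would be pushed towards the human map in a similar spirit.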

Naila has been looking into how this work can be applied in systems that help authorities police car-pooling road-toll schemes. Her team is helping to establish how many passengers are travelling in a car by filtering out irrelevant background information, which makes such systems more accurate.

Naila’s team is also helping to build an augmented reality application that would be especially useful for people driving unfamiliar vehicles. The mobile app allows users to scan the interior of a car so that the functions of particular buttons and switches pop up on screen. “If you have some idea of where people look in cars, for example the entertainment system or the dashboard, then the attention model can be trained to locate these areas and allow the app to quickly focus on areas likely to contain items of interest.”

AI experts unite

Naila sees the field of computer vision becoming increasingly dependent on inter-disciplinary collaboration between different artificial intelligence fields. Her group is already studying the interactions between images and text with fellow researchers in natural language processing. A recent Facebook innovation that helps visually impaired users “see” images by describing them in a form that can be read out by a screen reader has been of particular interest to Naila.

“Right there you see a very obvious interaction between three things,” she says. “You have computer vision to understand what’s in the image, natural language generation to actually describe that in words and then speech generation to make an oral expression of that. It makes a lot of sense for these things to work in concert.

“There’s a lot of work in linguistics about how to represent speech, how to extract semantics and summarise. It turns out that a lot of ways of representing text can also be used fairly successfully to represent images.

“The computer vision field has always been very collaborative. I would say it’s becoming more important because we’re getting to a point of sophistication where we can start to tackle more complex problems with multiple angles.”

Teaching computers to think

Fundamentally, Naila and hundreds of other scientists and engineers at Xerox are trying to make computers more intelligent. So how far and how quickly does Naila see this intelligence progressing?

“We’ve gone through such a huge change in computer vision just in the past four years or so. Change can be so rapid that I would never say that in 20 years we won’t have seen something extremely exciting happen, although I’m not expecting the singularity any time soon.”

“I don’t like making predictions. But I’m always really interested in looking at the next thing. You can never have a project that’s finished when it comes to research, you always have the thought, ‘how can I improve this?’ or, ‘how does that translate into this situation?’ That tends to be what I look at when I look forward.

“One of the reasons I’m at Xerox is the company is committed to creating innovative solutions that make positive changes in people’s lives. It’s literally my job to think about ways to make that happen.”

We’ve all changed the world. Every one of us. With every breath we take, our presence endlessly ripples outwards.

But few of us have the opportunity to change many lives for the better. And even fewer are challenged to do so every day. That’s the gauntlet thrown daily at Xerox research scientists – to try to effect change.

In return, we give them time and space to dream. And then the resources to turn dreams into reality – whether they’re inventing new materials with incredible functions, or using augmented reality to bolster the memory of Alzheimer’s patients.

We’re proud of our Agents of Change in Xerox research centres across the world.

Статьи по Теме

Ксерографическая технология печати - Публикации в СМИ | Xerox Россия - Xerox

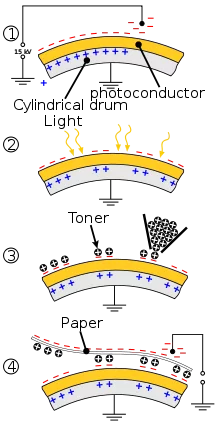

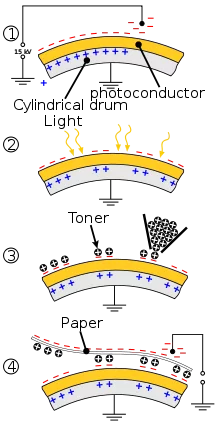

В современных принтерах тёмные части изображения наносятся лазерным лучом или светодиодной линейкой и тонер за счёт свойств барабанов, используемых в лазерной печати, «прилипает» к незаряжённым его участкам, а от заряжённых отталкивается.